Tech Debt vs Investment

Debt makes things worse over time; investment makes things better over time.

What is Debt?

When you borrow money from a bank, the bank usually charges some form of interest. E.g. if you borrow $100,000 at 5% annual interest compounded monthly and pay it back after 5 years, you end up paying about $128,336. If you pay it back after 10 years, you end up paying about $164,701. Compounding interest gets worse the longer you don’t pay it back.

Now take investing. A zero-fee index fund like FNILX, which is a blend of the top 500 US companies (like the S&P 500), has on average delivered >10% YoY returns. If you invest $100,000 with 10% growth compounded yearly, it grows to $161,051 after 5 years and to about $259,374 after 10 years. Over time, money makes more money. This is also how the rich get richer, since they own assets that grow in value. They don’t trade time for money.
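Both examples use the standard compound interest formula. Here is a minimal Python sketch of the arithmetic, assuming the 5% and 10% figures are annual rates (compounded monthly for the loan, yearly for the fund). The `compound` helper is a name made up for illustration.

```python
def compound(principal: float, annual_rate: float, years: int,
             periods_per_year: int = 1) -> float:
    """Value of `principal` after `years`, compounding `periods_per_year` times a year."""
    periodic_rate = annual_rate / periods_per_year
    return principal * (1 + periodic_rate) ** (years * periods_per_year)


# Debt: $100,000 at 5% annual interest, compounded monthly (assumed reading of the text).
debt_5y = compound(100_000, 0.05, 5, periods_per_year=12)
debt_10y = compound(100_000, 0.05, 10, periods_per_year=12)

# Investment: $100,000 at 10% growth, compounded yearly.
invest_5y = compound(100_000, 0.10, 5)
invest_10y = compound(100_000, 0.10, 10)

print(f"owed after 5y:   ${debt_5y:,.0f}")     # $128,336
print(f"owed after 10y:  ${debt_10y:,.0f}")    # $164,701
print(f"worth after 5y:  ${invest_5y:,.0f}")   # $161,051
print(f"worth after 10y: ${invest_10y:,.0f}")  # $259,374
```

The same function describes both sides of the coin. The only difference is whether the growing number is one you owe or one you own.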

So debt and investment are two sides of the same coin. One gets increasingly worse over time; the other gets increasingly better over time without effort on your part. You can obviously take a loan at a lower interest rate and invest it in something that yields a higher growth rate. That trade-off is worth it: you make more money with someone else’s money. 🥳

What is Tech Debt?

Is skipping tests tech debt? Should you spend a week getting 100% coverage instead of shipping a feature? Is skipping performance work considered debt? Is old code debt? What is debt?

The mental framework I have come to is “If X is left unchecked and more things are layered on top of X, does it get worse over time? If so, then X is debt”. That is the litmus test.

Conversely, if the foundations are built on the right invariants, things get increasingly better due to the power of primitives. It costs a lot less to re-use than to build something from scratch. “If more things are layered on top of Y, does it get better over time? If so, then Y is a good investment”.

One nice property of software is that it is malleable. You can pay down debt and turn it into an investment, just like you can pay down a house and rent it out.

Examples

Tests

When I was at Mixpanel, one particularly hairy UI component was the chrome-header. Every now and then it would regress. It was one huge JS file, it wasn’t typed in TypeScript, and the original authors had left the company. Every couple of changes would break an obscure scenario and we’d have to revert. As more engineers joined and touched it, new code was added that worked around the quirks of old code. “Over time things got worse building on the existing layers”.

We refactored it into smaller pieces, all written in TypeScript with good contracts between them, and started with just one test: “does it load without errors?” As other engineers touched the code, they’d follow the conventions and type their code too, and they’d extend the test suite with assertions for new behavior. That was the turning point from debt -> investment. As more code was added, the chrome-header got increasingly better. It is one of the most comprehensively tested components now. “Over time things got better building on the refactored layers”.

Smoke Tests

In my experience, most sites tend to be more stable over holidays than on weekdays. Most incidents are self-inflicted. When I joined, deploying was a scary experience. You had to manually check that a page wasn’t broken. As the product surface area grew, it was no longer possible to check all the different parts of the product; it’d take hours to do that. “Things got worse over time”.

We built a Puppeteer-based test suite that would hit staging and load a bunch of URLs through a Chromium browser. It would check that all network requests loaded in under 30 seconds, with no network errors and no JavaScript errors. The same suite would run pre-deploy and post-deploy. We had flakiness issues, so we added filters so that 3rd party scripts would not fail deploys. That was the turning point from debt -> investment.

We could now detect all sorts of issues before going to production and prevent potential incidents. Adding a new test was as simple as adding a URL to a file. “Things got better over time”: the smoke tests would catch all sorts of issues while refactoring. Deploying didn’t feel scary. We deployed >1000x a month with confidence.
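The pass/fail decision described above can be sketched in a few lines. This is not the original suite (that one drove Chromium through Puppeteer). It is a Python sketch of only the decision logic, applied to results that have already been collected. Every name here (`Request`, `PageResult`, `failures`, the `staging.example.com` host) is hypothetical; only the 30 second limit and the third-party filter come from the text.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from urllib.parse import urlparse

MAX_REQUEST_SECONDS = 30.0                   # limit mentioned in the text
FIRST_PARTY_HOSTS = {"staging.example.com"}  # hypothetical staging host


@dataclass
class Request:
    url: str
    seconds: float
    failed: bool = False


@dataclass
class PageResult:
    url: str
    requests: list[Request] = field(default_factory=list)
    # URLs of the scripts that raised a JavaScript error while the page loaded.
    js_error_sources: list[str] = field(default_factory=list)


def is_first_party(url: str) -> bool:
    return urlparse(url).hostname in FIRST_PARTY_HOSTS


def failures(page: PageResult) -> list[str]:
    """Return human-readable problems for one page; an empty list means it passes."""
    problems = []
    for req in page.requests:
        if not is_first_party(req.url):
            continue  # third-party scripts must not fail a deploy
        if req.failed:
            problems.append(f"network error: {req.url}")
        elif req.seconds > MAX_REQUEST_SECONDS:
            problems.append(f"slow request ({req.seconds:.0f}s): {req.url}")
    for source in page.js_error_sources:
        if is_first_party(source):
            problems.append(f"javascript error in: {source}")
    return problems


page = PageResult(
    url="https://staging.example.com/report",
    requests=[
        Request("https://staging.example.com/api/query", seconds=2.1),
        Request("https://cdn.thirdparty.example/widget.js", seconds=45.0, failed=True),
    ],
    js_error_sources=["https://cdn.thirdparty.example/widget.js"],
)
print(failures(page) or "ok")  # the third-party failures are filtered out
```

Keeping the list of pages as plain data is what makes "add a URL to a file" the whole cost of a new test.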

Design System

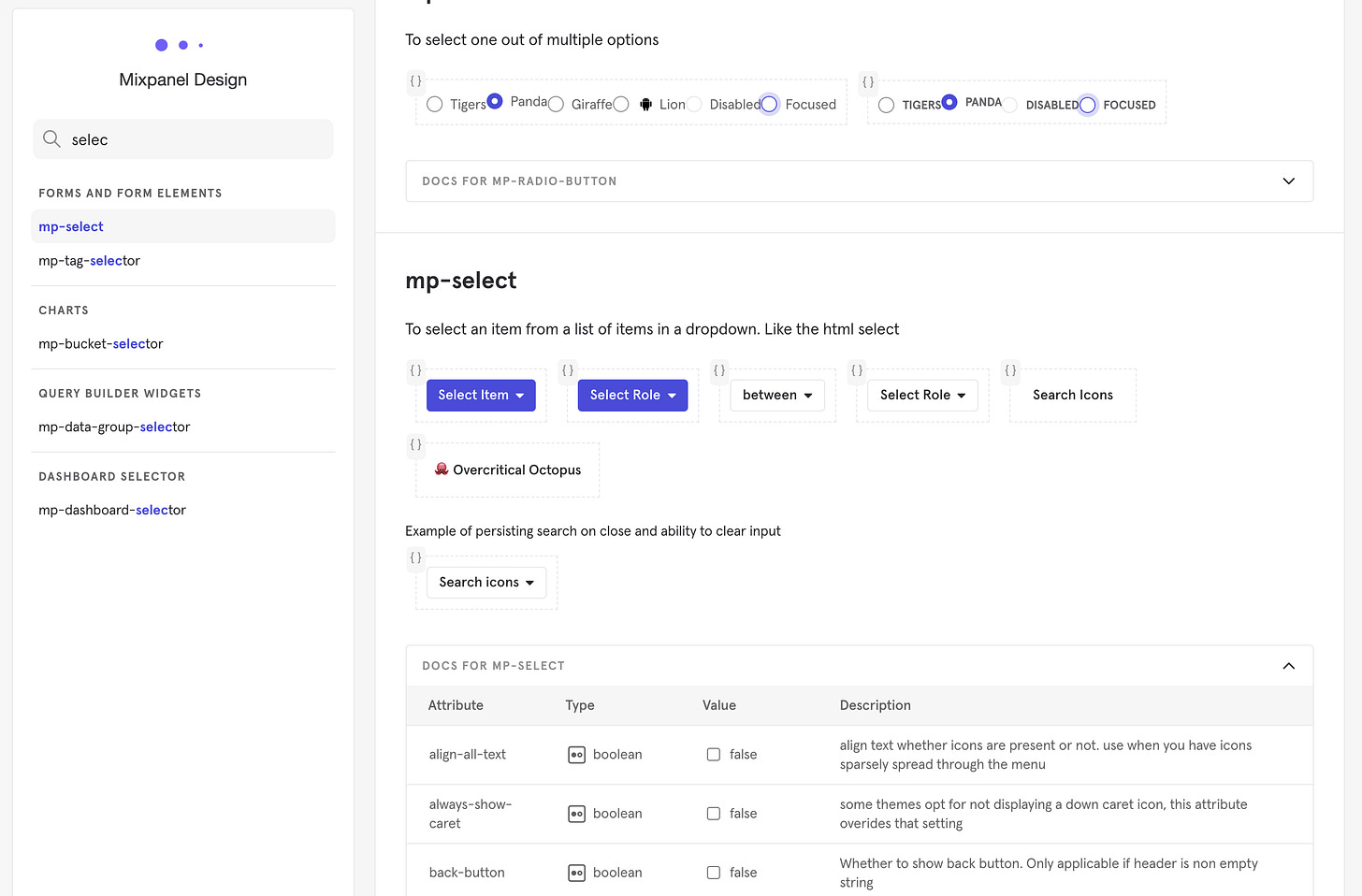

In a startup, you don’t have the luxury of time. So naturally the best way to move faster is to parallelize teams and give them full autonomy. The tradeoff is that teams can have shared problems and end up re-inventing solutions in different ways. This is great when you want to get something in the hands of customers ASAP, but not great when you have to maintain 5 different ways of displaying a menu. Every couple of months we’d have a new implementation of menus, buttons, tables and other UI components. “Over time things got worse building on the existing layers”.

Although there was a design system, it was out of date. Around 2019 there was a wave of new designer hires and the sentiment changed. They changed their stack to Figma and heavily prioritized Components.

The lesson was that the friction came from documentation. No one liked creating new menus, but it was hard to find what was re-usable. The principle of least friction meant that creating a new menu was easier than re-using an existing one that was too coupled to the code using it.

The newer design system (design.mixpanel.com) prioritized simplicity and autogeneration. It was 10% the size of the original code. There was no manual upkeep to keep attribute documentation in sync. Adding a new component took a couple of lines of code. Refactoring existing UI code was cheaper because components exposed a clear typed boundary. The number of components in the design system rose year over year. “Over time things got better building on the refactored layers”.

Rotation

The single most effective thing I have seen work to tackle tech debt is allocating time to fix the problem via a team rotation. It has to be part of a routine. Incremental refactors have, in my experience, been more successful than stop-the-world refactors. (Of course there are tradeoffs.)

Example: If you have a team of 6 members, every week someone stops doing any feature work and focuses on responding to incidents + paying down debt. The tasks are in a prioritized backlog. There is some upfront discussion on what is important and what layers ought to change. However engineers have to be given full autonomy to fix what is getting in their way and build good layers.
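As a rough sketch of what the rotation costs: with 6 people and one on rotation each week, each engineer comes up once every 6 weeks, and about 17% of the team's time goes to incidents and debt. The Python below just makes that arithmetic concrete. The names and the `rotation_schedule` helper are made up for illustration.

```python
from itertools import cycle, islice


def rotation_schedule(team, weeks):
    """Return [(week_number, engineer_on_rotation)], cycling through the team in order."""
    return list(enumerate(islice(cycle(team), weeks), start=1))


team = ["ana", "ben", "chi", "dev", "eli", "fay"]  # hypothetical team of 6
schedule = rotation_schedule(team, weeks=8)

for week, engineer in schedule:
    print(f"week {week}: {engineer} handles incidents + debt backlog")

# Share of total team time spent on rotation: one person out of six each week.
rotation_share = 1 / len(team)
print(f"{rotation_share:.0%} of team capacity")  # 17% of team capacity
```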

An alternative strategy is to allocate at least one person whose responsibility it is to drive it. Most problems don’t get fixed because of the “Somebody, anybody, nobody” problem.

The other side of the coin is endless refactoring: refactoring that feels good but doesn’t solve a real problem. This is usually more prevalent in large companies than in smaller startups. At smaller startups you have fewer layers of management, which means engineers are closer to customers and have a bit more leverage to fix what gets in the way of getting the customer’s job done.

One of the priorities for Recurrency is to focus on the internal reusability of logic/flows/components, with the goal of developing an internal library for anything we need three times.

The problem we're solving is ERP stagnation, which I see as an outgrowth of companies not investing over time. In short, the core operational infrastructure of 100,000s of businesses is so buried in technical debt that innovation is impossible. Our long-term challenge is to build the better system without falling into the same traps.

A book I'm always glad I read cover-to-cover (even though it says it's probably better to use it as a reference) is Code Complete. Part V of the book is aptly titled "Code Improvements", and in that part there are two things I am reminded of when reading this post: "the characteristics of software quality" (Section 20.1 in the 2nd edition of the book) and refactoring from an initial state of messy chaos to an ideal state.

There are 8 characteristics of software quality, and an adjacency matrix (figure 20-1) showing how improving one characteristic will likely affect the others (for example, improving efficiency often correlates with a decrease in correctness, reliability, integrity, adaptability, and/or accuracy, to pick one of the less synergistic of the 8 characteristics).

And the refactor one. Well, the main thing that stays in my brain is figure 24-3, which gives a metaphor for chaotic code written as a result of the messy reality we live in, and then an ideal final state where only the code that interfaces with the real world is chaotic and messy, and it is cleaned before it is served to the rest of the clean, trusted code.